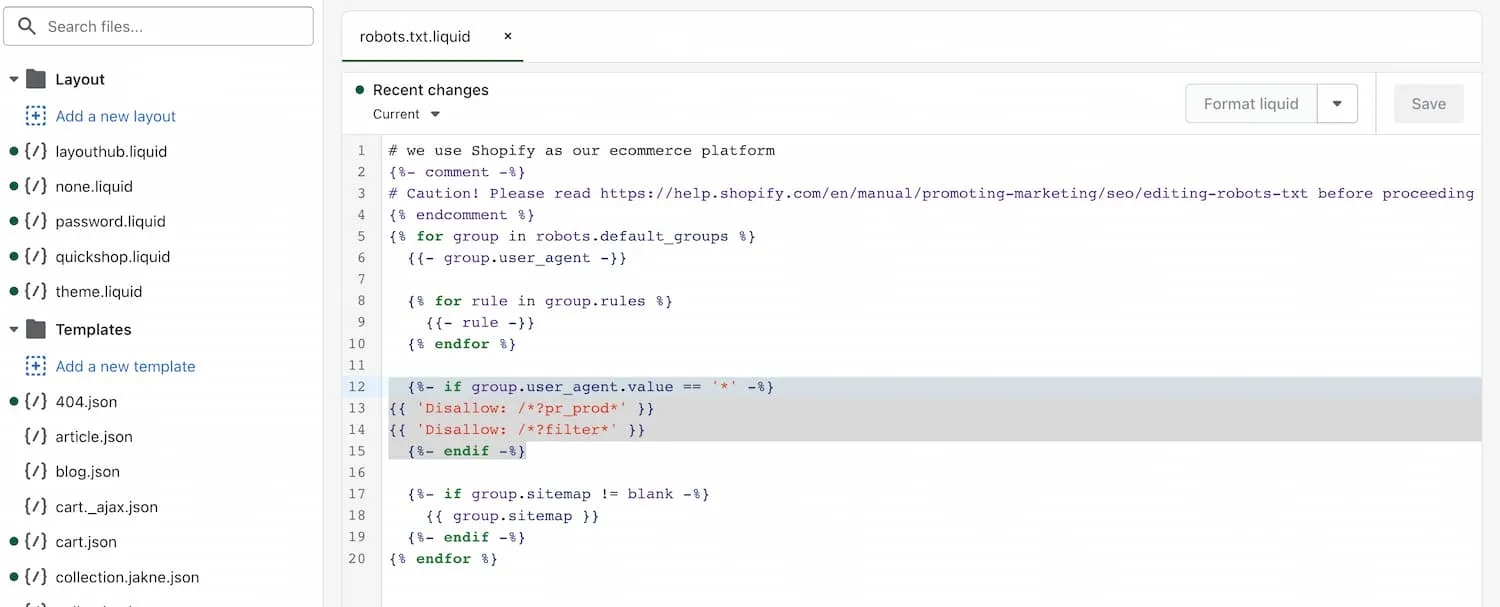

Quick fix:

Add the following to your robots.txt:

```liquid
{%- if group.user_agent.value == '*' -%}

{{ 'Disallow: /*?pr_prod*' }}

{{ 'Disallow: /*?filter*' }}

{%- endif -%}
```

Millions of indexed pages? What?

Recently I was contacted by a store owner with a very weird SEO problem.

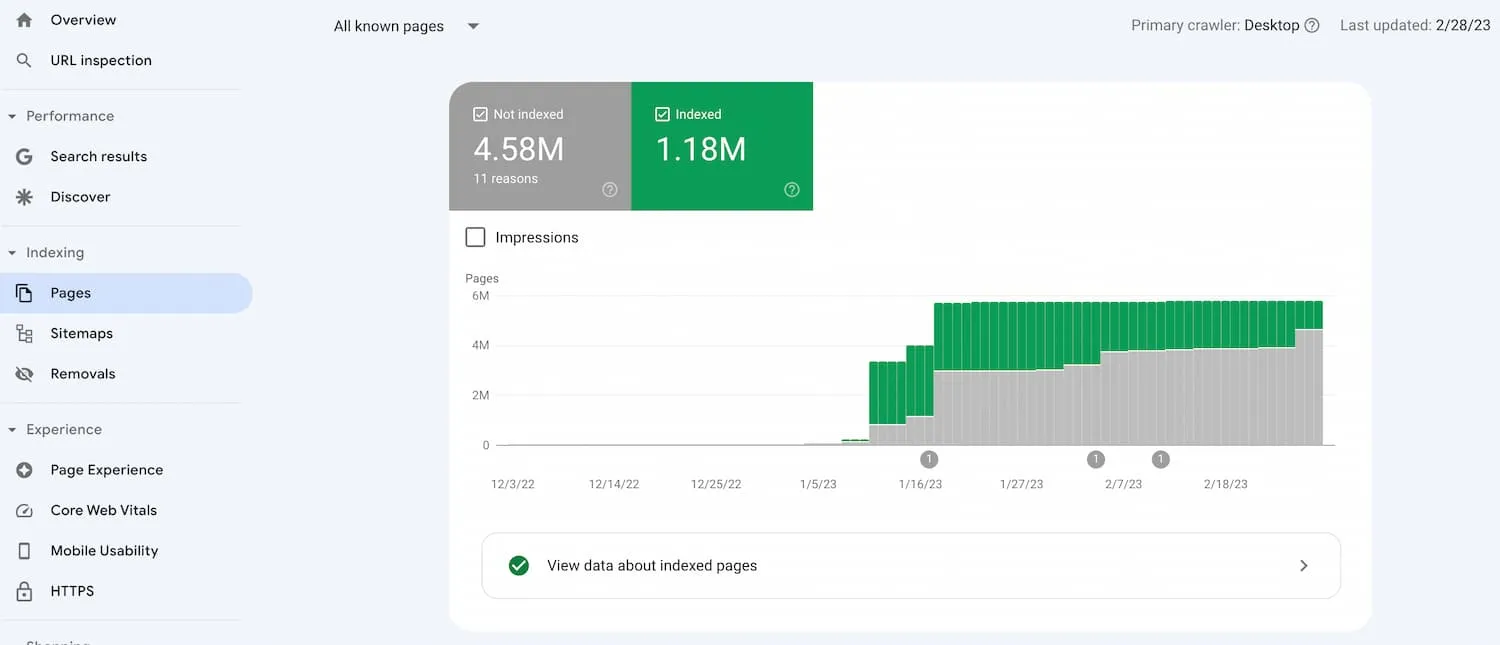

Google Search Console was showing over 1 MILLION indexed pages, and 4.5 MILLION non-indexed pages.

He only had about 200 products in reality.

In Google Search Console, we can see that millions of non-existent pages are being indexed.

But is this a bad thing, you might ask? What’s wrong with too many pages being indexed?

It would be more of a problem if they were not indexed, right?

Crawl budget is being used up

If you have a website with 10k+ pages, Google can have trouble finding them all.

Google only wants to spend a limited amount of time and resources to crawl your site. This is known as Crawl Budget.

So if your site has millions of pages, Google might not crawl all of them. Or it will crawl them eventually, but certainly not as fast as it would crawl your 200 real pages.

It could also misjudge the importance of each page.
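A back-of-the-envelope sketch makes the difference concrete. Google does not publish a fixed crawl rate, so the 10,000 URLs per day below is a hypothetical figure chosen only for illustration. The URL totals come from this store's Search Console numbers (about 1 million indexed plus 4.5 million non-indexed).

```python
import math


def days_to_crawl(total_urls: int, urls_per_day: int) -> int:
    """Days needed to crawl every URL once at a constant daily rate."""
    return math.ceil(total_urls / urls_per_day)


HYPOTHETICAL_RATE = 10_000  # assumed crawl rate; real crawl budgets vary and are not fixed
known_urls = 1_000_000 + 4_500_000  # indexed + non-indexed, from Search Console
real_pages = 200

print(days_to_crawl(known_urls, HYPOTHETICAL_RATE))  # 550
print(days_to_crawl(real_pages, HYPOTHETICAL_RATE))  # 1
```

Real crawling is not a steady queue like this, but the ratio is the point: the same budget spent on 5.5 million URLs stretches the time before Google revisits the pages that matter.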

And duplicate content

The issue was that Google treated pages with parameters as separate pages (I’ll elaborate below).

With so many pages sharing the same content, this would eventually show up as a duplicate content issue.
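To see why this reads as duplicate content, here is a minimal Python sketch that groups URLs by everything except the query string. The example.com URLs are hypothetical, shaped like this store's. Any group with more than one member is several URLs serving one page.

```python
from collections import defaultdict
from urllib.parse import urlsplit, urlunsplit


def group_by_page(urls):
    """Group URLs that differ only in their query string or fragment."""
    groups = defaultdict(list)
    for url in urls:
        parts = urlsplit(url)
        # Drop the query (?...) and fragment (#...) to get the bare page URL.
        page = urlunsplit((parts.scheme, parts.netloc, parts.path, "", ""))
        groups[page].append(url)
    return dict(groups)


urls = [  # hypothetical URLs
    "https://example.com/collections/gloves",
    "https://example.com/collections/gloves?filter.v.option.size=XS",
    "https://example.com/collections/gloves?filter.v.option.size=XS&view=view-24",
    "https://example.com/products/black-gloves",
    "https://example.com/products/black-gloves?pr_prod_strat=use_description&pr_seq=uniform",
]

for page, variants in group_by_page(urls).items():
    print(len(variants), page)
# 3 https://example.com/collections/gloves
# 2 https://example.com/products/black-gloves
```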

What’s causing so many indexed pages?

I suspected a weird app at first, but no.

These issues are inherent to Shopify’s filtering system, and the way it tracks recommended product clicks.

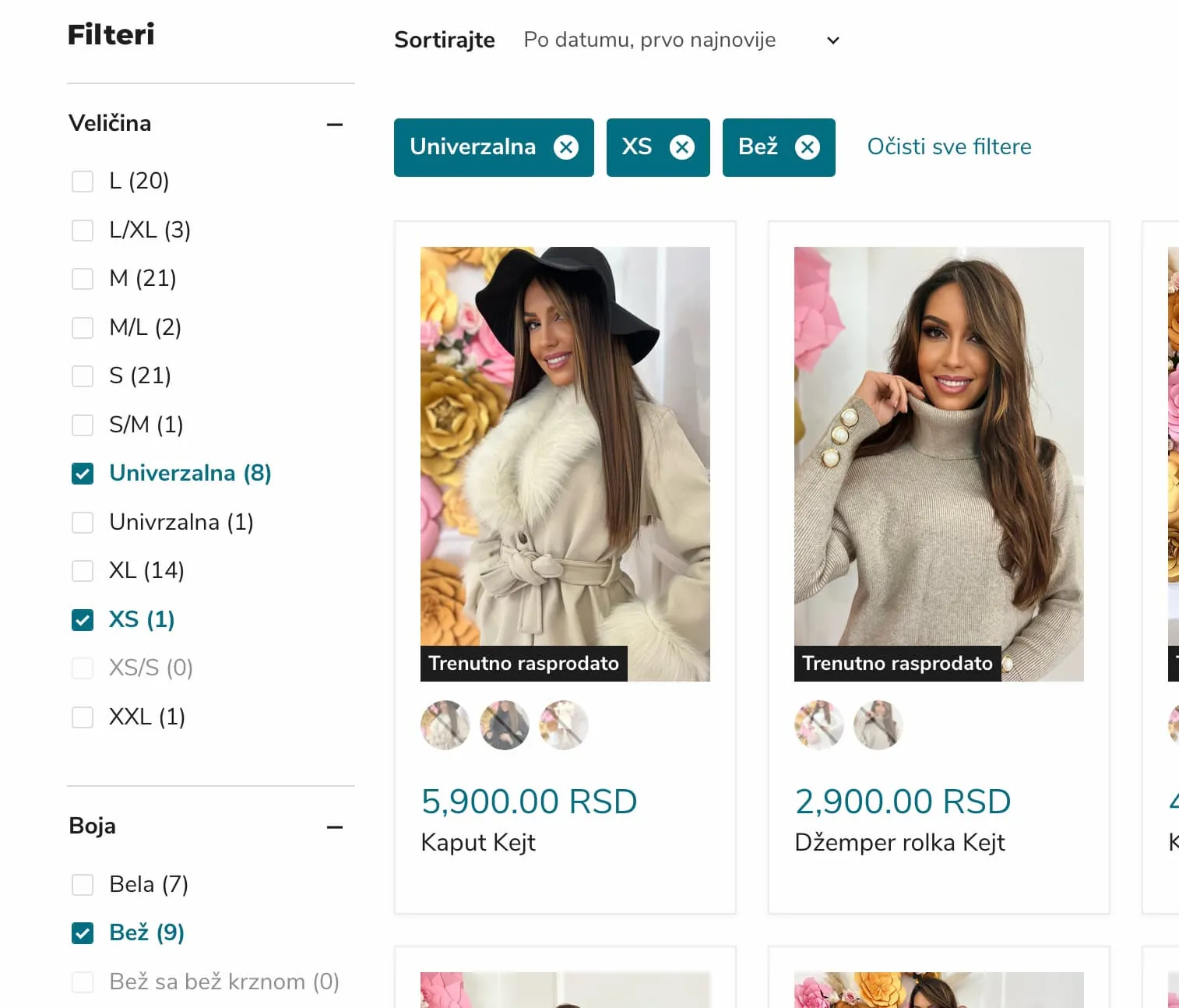

1. Filtered collections in every possible combination

When you filter a collection page in Shopify, you may notice the URL changes to show the currently selected filters.

By selecting several filters, you can see that there is a large number of possible combinations, in the thousands.

And each of these combinations creates a new URL.
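A rough sketch of the arithmetic, assuming every filter value works as an independent checkbox (the example URL below does select two sizes at once): n values give 2^n - 1 non-empty combinations. The 8 colours and 5 sizes are hypothetical counts, not this store's actual filters.

```python
from __future__ import annotations

from math import comb


def filter_combinations(values_per_filter: dict[str, int]) -> int:
    """Count non-empty selections when each filter value is an independent checkbox."""
    n = sum(values_per_filter.values())
    # Choosing k of n values, summed over k = 1..n, equals 2**n - 1.
    return sum(comb(n, k) for k in range(1, n + 1))


hypothetical_filters = {"colour": 8, "size": 5}
print(filter_combinations(hypothetical_filters))  # 8191
```

Display parameters such as `view` multiply that figure again, since each of their values produces yet another URL for the same selection.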

The currently selected filters create a URL with these parameters:

?filter.v.option.boja=Bež&filter.v.option.veličina=Univerzalna&filter.v.option.veličina=XS&view=view-24&grid\_list=grid-view
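Python's standard `urllib.parse.parse_qs` shows how that query string breaks down. The `veličina` (size) filter appears twice, once per selected value, while `view` and `grid_list` are display parameters that still change the URL. This only illustrates the URL's structure; the `filter.v.option.*` keys are taken from the example above.

```python
from urllib.parse import parse_qs

query = (
    "filter.v.option.boja=Bež"
    "&filter.v.option.veličina=Univerzalna"
    "&filter.v.option.veličina=XS"
    "&view=view-24&grid_list=grid-view"
)

params = parse_qs(query)
# Keep only the storefront filter parameters (keys starting with "filter.").
filters = {key: values for key, values in params.items() if key.startswith("filter.")}

for key, values in filters.items():
    print(key, values)
# filter.v.option.boja ['Bež']
# filter.v.option.veličina ['Univerzalna', 'XS']
```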

Google is aware of all these URLs. That’s fine, but we don’t want them to be indexed!

We only want the canonical (naked) version of the page to be indexed, e.g. /collections/collection-title.

But Google decided these were the best URLs to index.

So we need to de-index them.

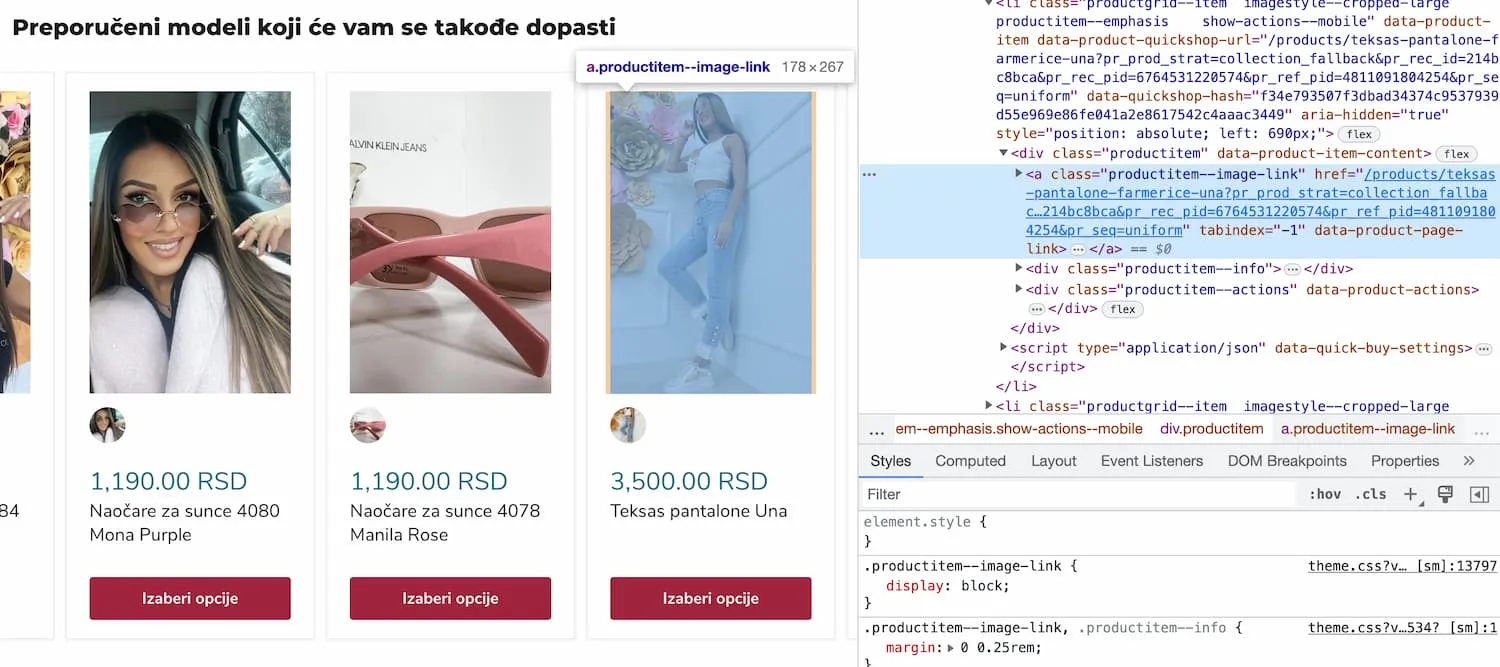

2. Recommended product links in every possible combination

You’re probably using the recommended products section at the bottom of each product page.

This is usually a great way to get some internal linking across your site.

Shopify also gives you analytics for these links, directly on the Analytics page in your Shopify admin, which can be useful for understanding customer behaviour.

But these analytics cause a big SEO problem!

The related product links don’t use the canonical URL. Instead, they use a URL with heaps of parameters on the end.

Note: Parameters are text on the end of a URL, usually after a question mark (?) symbol. For example: ?pr_prod_strat=use_description&pr_rec_id=…..

Here I’m inspecting the Recommended Products section at the bottom of a product page. We see the links have parameters.

Example:

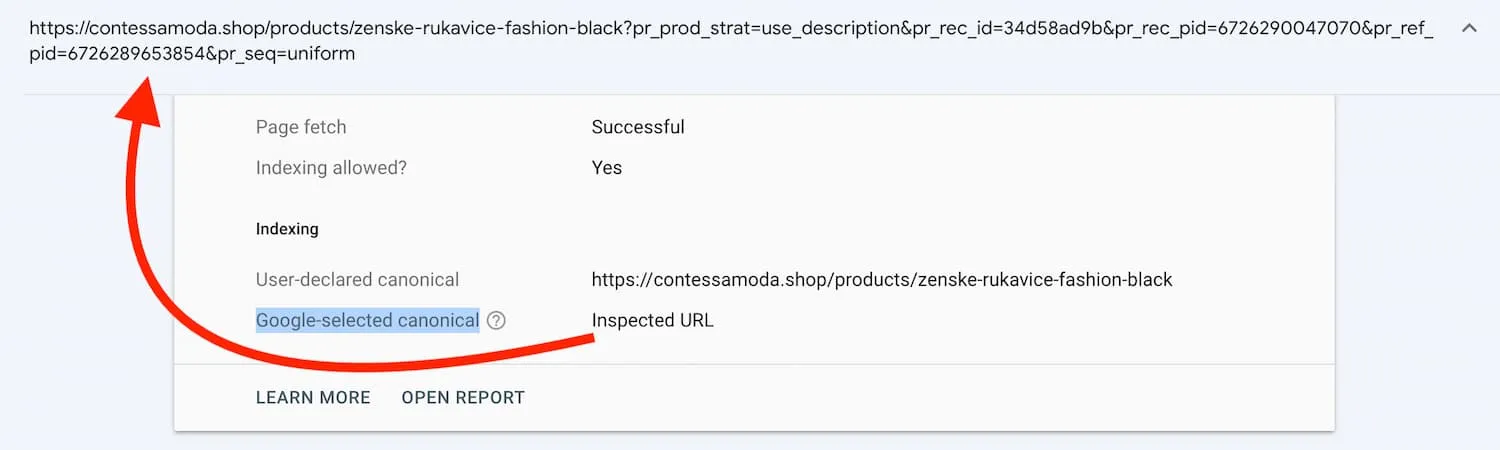

Here is one of the indexed URLs:

https://contessamoda.shop/products/zenske-rukavice-fashion-black?pr_prod_strat=use_description&pr_rec_id=34d58ad9b&pr_rec_pid=6726290047070&pr_ref_pid=6726289653854&pr_seq=uniform

Here is the real product URL:

https://contessamoda.shop/products/zenske-rukavice-fashion-black

This is actually the correct way to track recommended products in Shopify. You will see many themes doing exactly this.
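The only difference between the two URLs is the tracking query string. Here is a minimal Python sketch that removes those parameters and keeps any others. It assumes every recommendation-tracking parameter starts with `pr_`, which holds for the five in this example.

```python
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit


def strip_recommendation_params(url: str) -> str:
    """Remove query parameters whose names start with 'pr_' (assumed tracking prefix)."""
    parts = urlsplit(url)
    kept = [
        (key, value)
        for key, value in parse_qsl(parts.query, keep_blank_values=True)
        if not key.startswith("pr_")
    ]
    # urlunsplit omits the '?' entirely when the re-encoded query is empty.
    return urlunsplit((parts.scheme, parts.netloc, parts.path, urlencode(kept), parts.fragment))


indexed = (
    "https://contessamoda.shop/products/zenske-rukavice-fashion-black"
    "?pr_prod_strat=use_description&pr_rec_id=34d58ad9b"
    "&pr_rec_pid=6726290047070&pr_ref_pid=6726289653854&pr_seq=uniform"
)
print(strip_recommendation_params(indexed))
# https://contessamoda.shop/products/zenske-rukavice-fashion-black
```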

Aren’t canonicals supposed to help?

Google doesn’t always use the canonical that you provided.

If I check the source code in these wrongly indexed pages, I can see the correct canonical code:

```html
<link rel="canonical" href="[ the correct url ]">
```

But you can check which URL Google has decided to use.

Simply enter one of your long, incorrect URLs into the Search Console URL Inspection tool.

So for the example product above, Google thinks they are two separate pages.

Even though the page has the correct canonical link tag, Google has chosen the incorrect one.
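The same check can be scripted. The Search Console URL Inspection API reports both the canonical you declared (`userCanonical`) and the one Google picked (`googleCanonical`) under `inspectionResult.indexStatusResult`. The sketch below only reads an already-fetched response; authentication and the request itself are left out. The sample response is hypothetical, built from this product's two URLs.

```python
def canonical_mismatch(response: dict):
    """Return (user_canonical, google_canonical) if they differ, else None."""
    # Field names follow the URL Inspection API response shape.
    status = response.get("inspectionResult", {}).get("indexStatusResult", {})
    user = status.get("userCanonical")
    google = status.get("googleCanonical")
    if user and google and user != google:
        return user, google
    return None


sample_response = {  # hypothetical, trimmed-down response
    "inspectionResult": {
        "indexStatusResult": {
            "userCanonical": "https://contessamoda.shop/products/zenske-rukavice-fashion-black",
            "googleCanonical": (
                "https://contessamoda.shop/products/zenske-rukavice-fashion-black"
                "?pr_prod_strat=use_description&pr_rec_id=34d58ad9b"
                "&pr_rec_pid=6726290047070&pr_ref_pid=6726289653854&pr_seq=uniform"
            ),
        }
    }
}

print(canonical_mismatch(sample_response) is not None)  # True
```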

Internal linking causes incorrect canonicals

Google selects the canonical it considers the most suitable. Why did it make such a bad choice?

My guess is that it’s because there are more of these parameterised links on your site than links to your real product URLs.

Internal linking is a strong signal, and because the recommended product URLs are used multiple times on every page, Google thinks they’re the best canonical URLs to use.

And there’s another issue inherent to Shopify:

Canonical product links usually use the shortened product URL, in the form /products/product-title.

But internal links throughout a Shopify site usually use breadcrumb-style URLs that look like this: /collections/collection-title/products/product-title

This creates a situation where there are almost no internal links going to the canonical product URL. So Google considers this canonical unimportant.
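Here is a small Python sketch of that imbalance. Given links to one product found across a few pages (the hrefs below are hypothetical), it counts how many point at the canonical `/products/product-title` form and how many use a breadcrumb or parameterised variant.

```python
from collections import Counter
from urllib.parse import urlsplit


def classify_product_link(href: str, canonical_path: str) -> str:
    """Label a link to one product as canonical, breadcrumb-style or parameterised."""
    parts = urlsplit(href)
    if parts.query:
        return "parameterised"
    if parts.path == canonical_path:
        return "canonical"
    if parts.path.startswith("/collections/") and parts.path.endswith(canonical_path):
        return "breadcrumb"
    return "other"


canonical = "/products/black-gloves"
hrefs = [  # hypothetical links to the same product gathered from a few pages
    "/collections/gloves/products/black-gloves",
    "/collections/sale/products/black-gloves",
    "/products/black-gloves?pr_prod_strat=use_description&pr_seq=uniform",
    "/products/black-gloves?pr_prod_strat=use_description&pr_seq=uniform",
    "/products/black-gloves",
]

counts = Counter(classify_product_link(h, canonical) for h in hrefs)
print(counts["canonical"], counts["breadcrumb"], counts["parameterised"])  # 1 2 2
```

Only one link in five uses the canonical URL, which is the kind of signal the guess above relies on.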

Why doesn’t this happen on every Shopify store?

This is where I’m confused, because I haven’t seen this before at all. I did find a forum post about this issue, but it seems to be very rare.

Firstly, this is an issue with Shopify 2.0. So if you haven’t updated to a Shopify 2.0 theme, you won’t have this issue.

But secondly, I think this issue only affects large stores. Maybe there needs to be a certain number of these bad links before Google considers them as canonicals. Everything works fine on smaller stores.

And since fewer than 30% of stores have over 100 products, it could just be a rare issue, but one that we will see more of soon.

The Solution?

Luckily, the solution is simple: we don’t want Google to index these pages.

We can use robots.txt to block Google from crawling these pages.

We are going to disallow crawling of any URL containing ?filter or ?pr_prod; these strings are present in all of our bad links.
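Google treats `*` in a robots.txt rule as "any sequence of characters" and matches the rule against the URL's path plus query string, starting from the first character. Below is a simplified Python illustration of that matching, not Google's actual parser: it handles `*` and a trailing `$`, but ignores allow/disallow precedence. It shows which URLs the two rules catch. The example.com URLs are hypothetical.

```python
import re
from urllib.parse import urlsplit


def rule_to_regex(pattern: str) -> re.Pattern:
    """Translate a robots.txt path pattern ('*' wildcard, optional '$' end anchor) to a regex."""
    anchored = pattern.endswith("$")
    if anchored:
        pattern = pattern[:-1]
    # Escape everything literally, then let each '*' match any run of characters.
    body = ".*".join(re.escape(piece) for piece in pattern.split("*"))
    return re.compile(body + ("$" if anchored else ""))


def is_disallowed(url: str, patterns) -> bool:
    """True if the URL's path + query matches any pattern from its first character."""
    parts = urlsplit(url)
    target = parts.path + ("?" + parts.query if parts.query else "")
    return any(rule_to_regex(p).match(target) for p in patterns)


RULES = ["/*?pr_prod*", "/*?filter*"]

for url in [
    "https://contessamoda.shop/products/zenske-rukavice-fashion-black?pr_prod_strat=use_description&pr_seq=uniform",
    "https://contessamoda.shop/products/zenske-rukavice-fashion-black",
    "https://example.com/collections/gloves?filter.v.option.size=XS&view=view-24",
    "https://example.com/collections/gloves?view=view-24&filter.v.option.size=XS",
]:
    print(is_disallowed(url, RULES), url)
# True, False, True, False (in that order)
```

Note the last case: `/*?filter*` only matches when a `filter.` parameter comes straight after the `?`. A URL that puts another parameter first would not be blocked by these two rules.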

How to modify robots.txt in Shopify

- Go to Online Store > Themes. Click the three dots next to your theme, then click Edit code.

- Inside the code editor, search for “robots”. If nothing comes up, you have to create it.

- To create robots.txt, look under the Templates folder and click “Add a new template”. Select robots.txt.

Your new robots.txt.liquid template should be created.

- Yes, it already has some code in it.

- Yes, it has a .liquid extension. Don’t worry: this gets rendered to robots.txt as usual when you preview it on your site.

- To preview it, use the URL example.com/robots.txt
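After saving, you can confirm the rules made it into the rendered file. This minimal Python sketch checks that each rule appears as its own line, so it also flags a rule that rendered but ended up run together with another line. `fetch_robots` needs network access, and example.com stands in for your store's domain.

```python
from __future__ import annotations

from urllib.request import urlopen

EXPECTED_RULES = ("Disallow: /*?pr_prod*", "Disallow: /*?filter*")


def fetch_robots(domain: str) -> str:
    """Download the live robots.txt for a domain (network access required)."""
    with urlopen(f"https://{domain}/robots.txt", timeout=10) as resp:
        return resp.read().decode("utf-8")


def missing_rules(robots_txt: str, rules=EXPECTED_RULES) -> list[str]:
    """Return the rules that do not appear as a line of their own in robots.txt."""
    lines = {line.strip() for line in robots_txt.splitlines()}
    return [rule for rule in rules if rule not in lines]


# Usage (replace example.com with your store's domain):
# print(missing_rules(fetch_robots("example.com")))
```

An empty list means both rules are present.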

Here is the code you need to add:

```liquid
{%- if group.user_agent.value == '*' -%}

{{ 'Disallow: /*?pr_prod*' }}

{{ 'Disallow: /*?filter*' }}

{%- endif -%}
```

You should add it inside the “for group in robots.default_groups” loop, which wraps almost the entire file.

I recommend adding it directly underneath the “endfor” that closes the “for rule in group.rules” loop. Here is what the entire file looks like: